The virtual machine has long been the go-to standard in cloud computing. But what if there were a lighter, more cost-effective, and more scalable alternative to a virtual machine? A Docker container is exactly that. Docker containers and VMs both play an important role in helping many companies thrive today, so Docker containers vs virtual machines is a hot topic for them. Big businesses have been spending billions of dollars in the quest for the best containerization and virtual machine platforms. With the rapid expansion of cloud computing, small and medium-sized enterprises are also looking to integrate virtualization into their business processes. As a result, it has become critical to understand Docker containers, virtual machines, and the differences between them.

What are Virtual Machines?

As the computing power and capability of servers grew over time, applications running directly on bare metal could not take full advantage of the newfound resources. Virtual machines (VMs) were created in response, and they resolved several of these computational problems. A virtual machine can be thought of as a program that simulates the operation of hardware resources or an entire computer system. In simple terms, VMs let you run what seem to be several computers on the hardware of a single computer.

Hypervisor

Hypervisors are lightweight software layers that sit between virtual machines and the physical computer. The hypervisor divides the host's resources among the VMs, allocating CPU, RAM, and storage as required. Each VM also includes the binaries and libraries needed to run its applications. The hypervisor controls and runs the guest operating systems. VMs are isolated from the underlying system, so a VM's processes don't interfere with the processes of the host computer. As a result, virtual machines are used for tasks such as executing virus-infected programs and testing different applications and operating systems.

A virtual machine can be described as a computer file, often called an image, that behaves like a real computer inside a computing environment known as the host. It runs programs and applications as if it were a separate device, which makes it suitable for testing other operating systems (including beta releases), creating OS backups, and running software applications. A host may have several virtual machines running at any given time. A virtual machine's main files include its configuration files, virtual disk files, log files, and NVRAM file.
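
To make the hypervisor's resource-allocation role concrete, here is a minimal Python sketch of a toy hypervisor that carves a host's CPUs, RAM, and disk into fixed slices, one per VM. The names `Resources` and `ToyHypervisor` are hypothetical, and the sketch assumes strict static allocation with no overcommit; real hypervisors such as KVM can overcommit CPU and memory.

```python
from dataclasses import dataclass, field


@dataclass
class Resources:
    """A bundle of hardware resources (hypothetical units)."""
    cpus: int
    ram_gb: int
    disk_gb: int


@dataclass
class ToyHypervisor:
    """Toy hypervisor that statically partitions host resources among VMs.

    Assumption: no overcommit. Real hypervisors can overcommit CPU and RAM.
    """
    host: Resources
    vms: dict = field(default_factory=dict)  # VM name -> Resources

    def free(self) -> Resources:
        # Whatever has not been handed to a VM is still available.
        return Resources(
            cpus=self.host.cpus - sum(vm.cpus for vm in self.vms.values()),
            ram_gb=self.host.ram_gb - sum(vm.ram_gb for vm in self.vms.values()),
            disk_gb=self.host.disk_gb - sum(vm.disk_gb for vm in self.vms.values()),
        )

    def create_vm(self, name: str, cpus: int, ram_gb: int, disk_gb: int) -> None:
        free = self.free()
        if cpus > free.cpus or ram_gb > free.ram_gb or disk_gb > free.disk_gb:
            raise RuntimeError(f"not enough free resources for VM {name!r}")
        # The VM gets a dedicated slice of virtual hardware.
        self.vms[name] = Resources(cpus, ram_gb, disk_gb)


hv = ToyHypervisor(Resources(cpus=16, ram_gb=64, disk_gb=1000))
hv.create_vm("web", cpus=4, ram_gb=8, disk_gb=100)
hv.create_vm("db", cpus=8, ram_gb=32, disk_gb=500)
print(hv.free())  # Resources(cpus=4, ram_gb=24, disk_gb=400)
```

Because each VM's slice is reserved when it is created, a third VM asking for 8 CPUs would be rejected here even if the first two VMs were idle.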

Server Virtualization

Server virtualization is a field where virtual machines are especially useful. Server virtualization splits a single physical server into several independent, unique servers, each of which runs its own operating system. Each virtual machine provides virtual hardware such as processors, RAM, network interfaces, and storage devices. The term has gained a lot of traction over the last decade.

Virtual machines have also proven to be a common solution to a long-standing problem. If you run software you don't fully trust, or run it on an insecure network, you risk attacks and malicious activity on your computer, which could disrupt the enterprise and give outsiders unauthorized access to sensitive information. Virtual machines address this problem because software running inside a VM is isolated from the host system, so vulnerabilities in that software or network cannot easily tamper with the host machine. This also makes VMs incredibly useful as sandboxes. Because a VM works and operates independently, it can be regarded as a separate operating system within the host OS.

Although virtual machines are highly useful, they are often criticized for failing to provide a consistent base or consistent output, because each one carries a plethora of objects, dependencies, and libraries.

How Virtual Machines Work

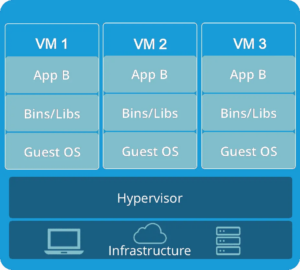

We can divide the architecture of a VM into four components:

- The underlying host hardware system, which includes the server or machine and its operating system.

- A middleman software layer called the hypervisor, which sits between the underlying host infrastructure and the virtual machines.

- The VMs (guests) on the same host machine, which share the host's resources and communicate with it through the hypervisor.

- The software and processes that run inside each VM's guest OS.

Before any virtual machines are deployed, the hypervisor must be properly configured. Admins can build virtual machines from a CLI using KVM (Kernel-based Virtual Machine), an open-source virtualization technology built into the Linux kernel, typically through command-line tools such as virsh and virt-install.
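
Before setting up KVM, admins often check whether the host can run KVM guests at all. The following Python sketch assumes an x86 Linux host: it looks for the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flag in `/proc/cpuinfo` and for the `/dev/kvm` device node. The function names are hypothetical, and this is a rough heuristic, not a substitute for tools such as `kvm-ok` or `virt-host-validate`.

```python
import os


def cpu_supports_hw_virtualization(cpuinfo_text: str) -> bool:
    """Return True if a /proc/cpuinfo dump advertises VT-x ("vmx") or AMD-V ("svm").

    Assumes the x86 format, where CPU features are listed on "flags" lines.
    """
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags or "svm" in flags:
                return True
    return False


def kvm_ready(cpuinfo_path: str = "/proc/cpuinfo", kvm_device: str = "/dev/kvm") -> bool:
    """Rough pre-flight check before creating KVM guests on a Linux host."""
    try:
        with open(cpuinfo_path) as f:
            cpuinfo = f.read()
    except OSError:
        return False  # not Linux, or /proc is unavailable
    # /dev/kvm appears once the kvm kernel modules are loaded.
    return cpu_supports_hw_virtualization(cpuinfo) and os.path.exists(kvm_device)
```

Calling `kvm_ready()` on a suitable host returns `True`; inside a VM without nested virtualization, the CPU flags are usually hidden and the check returns `False`.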

Advantages of Virtual Machines

- You can use more than one operating system inside the same physical server or host infrastructure.

- VMs improve the reliability of the host system and protect it from crashes: even if a guest OS crashes, the processes on the host OS are not affected.

- You can test untrusted software or any other suspicious piece of code inside a VM, since it provides an additional layer of security.

Disadvantages of Virtual Machines

- They are slower, less efficient, and use a lot of resources, because each VM runs a full operating system.

- If the underlying host has limited hardware (a low configuration), VM performance is significantly affected.

- Running several VMs on a single host can lead to unstable results.

What are Docker Containers?

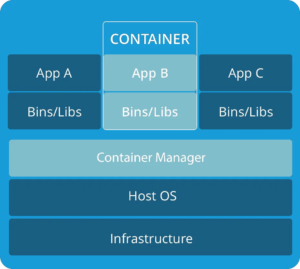

Companies today want to digitally transform their businesses, but they are constrained by having to choose tools from a huge variety of software, cloud-based technologies, and on-premises infrastructure. Docker is a container framework that brings conventional apps and microservices architectures built on multiple servers into an integrated and secure supply chain. Docker is a software development platform and virtualization technology that uses containers to make it simple to design, deploy, and test applications. Containers are compact and platform-independent, and they contain all the libraries, binaries, configuration files, and dependencies required to run the application. In other words, a containerized application runs the same way regardless of where it is or which platform it runs on, because the container provides an isolated, packaged environment for it.

Because containers are isolated from one another, they are secure and stable, and many containers can run on the same host at the same time. Containers are also lightweight: they do not need a hypervisor and instead run directly on the host's kernel. Containers are therefore a form of operating-system-level virtualization. They are an application-layer abstraction that packages code and dependencies together, and they can run anything from a small application to the individual services of a large microservices architecture. Containers share the host OS kernel and, when built from common image layers, binaries and libraries as well. As a result, containers often require just a few megabytes and start in a few seconds.

How Docker Containers Work

To run a program, a container requires an underlying OS that supports the necessary packages, binaries, and libraries, plus a share of the host's system resources. You can build a template of a customized environment using Docker images, which are blueprints of your application's requirements, and create multiple reusable containers from the same image. A container essentially runs a snapshot of its image, a fixed point-in-time copy of the application's environment, which ensures that the app behaves consistently. To run the applications inside it, the container uses the kernel of the host OS. Rather than virtualizing the host's hardware, Docker containers sit on top of the host OS. That is why they are so lightweight and fast compared to virtual machines.
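
The image-as-template idea can be modeled in a few lines of Python. In this toy sketch (not Docker's actual storage driver), each image layer is a read-only dictionary of file paths, and each container is a `collections.ChainMap` whose first mapping acts as the container's thin writable layer. Writes go only to that layer, so containers started from the same image stay independent while the image itself is never modified. Docker achieves a similar effect with a union filesystem such as OverlayFS; the layer contents and the `run_container` helper below are hypothetical.

```python
from collections import ChainMap

# Hypothetical image layers: each maps file paths to file contents.
base_layer = {"/etc/os-release": "Debian", "/usr/bin/python3": "<binary>"}
app_layer = {"/app/main.py": "print('hello')", "/app/config.ini": "debug=false"}

# ChainMap searches left to right, so the topmost (newest) layer comes first.
IMAGE_LAYERS = (app_layer, base_layer)


def run_container(image_layers):
    """Start a toy 'container': a fresh writable layer over shared read-only layers."""
    # Assignments on a ChainMap only touch its first mapping, which mirrors
    # the copy-on-write layer a container gets on top of its image.
    return ChainMap({}, *image_layers)


c1 = run_container(IMAGE_LAYERS)
c2 = run_container(IMAGE_LAYERS)
c1["/app/config.ini"] = "debug=true"  # changes only c1's writable layer

print(c1["/app/config.ini"])         # debug=true
print(c2["/app/config.ini"])         # debug=false
print(app_layer["/app/config.ini"])  # debug=false (the image is unchanged)
```

Both containers still read `/etc/os-release` from the same shared base layer, which is why many containers built from one image cost little extra space.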

Advantages of Docker Containers

- Docker containers are small, often just a few megabytes, and you can limit how much CPU and memory each container uses.

- Thanks to their small size, they are highly scalable and start quickly.

- They are an integral component of CI/CD processes in agile development.

- Containers help reduce the resources needed for IT management.

- Security updates are simpler and can be rolled out in a matter of seconds with only a few commands.

- Sharing code and apps with the team becomes easier.
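
The CPU and memory limits mentioned in the list map to `docker run` flags such as `--cpus` and `--memory` (for example `docker run --cpus=1.5 --memory=512m <image>`). Docker's documentation describes `--cpus=1.5` as equivalent to `--cpu-period=100000 --cpu-quota=150000`, and `--memory` takes a number with a `b`, `k`, `m`, or `g` suffix. The Python helpers below, whose names are hypothetical, sketch that translation; the memory parser is simplified and assumes binary (1024-based) units and a single-letter suffix.

```python
DEFAULT_CPU_PERIOD_US = 100_000  # Docker's default CFS scheduler period (100 ms)

# Simplified suffix table, assuming binary (1024-based) units.
_UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def cpus_to_cfs_quota(cpus: float, period_us: int = DEFAULT_CPU_PERIOD_US) -> int:
    """Translate a --cpus value into the equivalent --cpu-quota in microseconds."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    # The container may use `cpus` full CPUs' worth of time in every period.
    return round(cpus * period_us)


def memory_to_bytes(limit: str) -> int:
    """Parse a --memory style value such as '512m' or '2g' into bytes."""
    limit = limit.strip().lower()
    if limit and limit[-1] in _UNITS:
        number, unit = limit[:-1], limit[-1]
    else:
        number, unit = limit, "b"  # a bare number is treated as bytes
    return int(float(number) * _UNITS[unit])


print(cpus_to_cfs_quota(1.5))   # 150000
print(memory_to_bytes("512m"))  # 536870912
```

Expressing the limit as a quota per period is what lets the kernel throttle a container that tries to use more CPU time than it was given.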

Disadvantages of Docker Containers

- Containers run on top of the host machine's operating system and therefore depend entirely on it.

- Containers must be configured carefully to provide adequate security and the right environment for your applications.

- Data written inside a container is lost when the container is deleted, so you need persistent storage solutions such as volumes and bind mounts to keep it.
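
The need for volumes follows from where a container writes its data. The hypothetical `Container` class below is a toy Python model, not Docker's implementation: writes under a mount point go to a volume that lives outside the container, while all other writes land in the container's private writable layer, which disappears along with the container.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    """Toy container: a private writable layer plus optional mounted volumes."""
    name: str
    writable_layer: dict = field(default_factory=dict)
    mounts: dict = field(default_factory=dict)  # mount path -> volume (a dict)

    def write(self, path: str, data: str) -> None:
        # Writes under a mount point go to the mounted volume...
        for mount_path, volume in self.mounts.items():
            if path.startswith(mount_path + "/"):
                volume[path[len(mount_path) + 1:]] = data
                return
        # ...everything else lands in the container's own writable layer.
        self.writable_layer[path] = data


volumes = {"dbdata": {}}  # volumes are managed outside any container

c = Container("db", mounts={"/var/lib/data": volumes["dbdata"]})
c.write("/tmp/cache", "scratch")
c.write("/var/lib/data/users.db", "alice,bob")
del c  # removing the container discards its writable layer

print(volumes["dbdata"])  # {'users.db': 'alice,bob'}
```

A replacement container that mounts the same `dbdata` volume would see `users.db` again, while `/tmp/cache` is gone for good.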

Docker Containers vs Virtual Machines

1. Support for the OS and Architecture

Each virtual machine runs its own guest OS on top of the host OS, and the guest OS can be any operating system, such as Linux or Windows, regardless of the host OS. Docker containers, on the other hand, all share the OS kernel of the single host they run on. Because they share the host OS, containers are lighter and boot faster. Docker containers are suitable for running several applications on a single OS kernel; however, VMs are required if the programs or services must run on different operating systems.

2. Security

VMs, with their own kernel and security features, are fully self-contained. As a result, virtual machines are used to run applications that need more privileges and protection. Docker containers, on the other hand, share the host kernel, so giving users root access or running applications with administrator rights inside them is not recommended. Because containers have access to the host's kernel subsystems, a single compromised application could potentially compromise the entire host system.
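
One common mitigation for the root-access risk is to run containerized processes as a non-root user, for example through the `USER` instruction in a Dockerfile. As an extra safeguard, an application can also refuse to start as root. This Python sketch uses `os.geteuid()`, which exists only on Unix-like systems; the function name is hypothetical and the policy is an illustrative assumption, not a Docker feature.

```python
import os


def refuse_to_run_as_root(euid=None) -> None:
    """Raise PermissionError if the process has root privileges.

    Without user-namespace remapping, UID 0 inside a container is UID 0 on the
    shared host kernel (although Docker drops many root capabilities by default).
    """
    if euid is None:
        # os.geteuid() is only available on Unix-like systems.
        euid = os.geteuid() if hasattr(os, "geteuid") else -1
    if euid == 0:
        raise PermissionError("refusing to run as root; set a non-root USER in the image")
```

Calling `refuse_to_run_as_root()` at the top of the program's entry point makes a misconfigured image fail fast instead of silently running with root privileges.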

3. Portability

Because each virtual machine bundles its own guest operating system and depends on the virtual hardware its hypervisor provides, VMs cannot always be moved across platforms without compatibility issues. If an application must be evaluated on multiple platforms, Docker containers are worth considering from the implementation stage onward. Docker containers are packaged, self-contained environments that can execute programs and applications, and they are portable across hosts because they build on the underlying host OS instead of running their own guest OS (as long as the host kernel is compatible, for example a Linux kernel for Linux containers). Docker containers can also be deployed to servers quickly because, unlike virtual machines, they are lightweight and can be started and stopped in a fraction of the time.

4. Performance

Virtual machines use more resources than Docker containers because each one must first load an entire operating system. Docker containers use fewer resources thanks to their lightweight architecture. In the case of VMs, resources such as processor, RAM, and I/O are usually allocated to each guest OS when it is configured, whereas containers draw on host resources according to their load. Containers are also easier to scale and duplicate than virtual machines because they don't require installing an operating system.
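
A back-of-the-envelope comparison illustrates why reserving resources per guest costs more than drawing from a shared pool. The numbers are hypothetical: three workloads currently use 1, 2, and 1 GB of memory, and each VM is assumed to be sized up front at 4 GB. The sketch ignores guest OS overhead as well as hypervisor features such as memory ballooning and overcommit, which narrow the gap in practice.

```python
# Hypothetical current memory usage per workload, in GB.
workload_usage_gb = {"web": 1.0, "api": 2.0, "worker": 1.0}

# Assumption: every VM is sized for its peak up front.
VM_SIZE_GB = 4.0


def memory_committed_vms(usage: dict, vm_size_gb: float) -> float:
    # Each VM holds its full allocation regardless of its current load.
    return vm_size_gb * len(usage)


def memory_committed_containers(usage: dict) -> float:
    # Containers take from the shared host pool only what they currently use.
    return sum(usage.values())


print(memory_committed_vms(workload_usage_gb, VM_SIZE_GB))  # 12.0
print(memory_committed_containers(workload_usage_gb))        # 4.0
```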

Docker Containers vs Virtual Machines: A Head-to-Head Comparison Table

| Virtual Machines | Docker Containers |
| --- | --- |
| Process isolation is at the hardware level. | Process isolation is only at the OS level. |
| Each VM has its own OS, even when running on the same physical machine. | Containers share the host OS kernel. |
| VMs take time to boot. | Docker containers can start in just a few seconds. |
| They are large, usually gigabytes in size. | They are lightweight, usually a few megabytes in size. |
| It is difficult to find tailored VM images. | Tons of pre-built Docker images are available. |
| VMs are less portable because they depend on the underlying host infrastructure. | Containers are portable because they rely only on the OS of the underlying machine. |
| Creating and initializing a VM takes time. | A container can be created in seconds with simple commands. |
| Virtual machines require a lot of resources. | Containers require fewer resources. |

Docker Containers vs Virtual Machines: Who's the Winner?

Framing this as Docker containers vs virtual machines is not really accurate, or even relevant. They aren't rivals: comparing Docker and virtual machines isn't entirely fair, because they are designed for different purposes. Docker is gaining popularity, but that does not mean it will replace virtual machines; in some cases, a VM is still the better option. VMs are preferred over containers in production environments that need strong isolation, since each VM runs its own OS and does not put the security of the host machine at risk. However, if applications need to be tested across many environments, Docker is the way to go, since images for a variety of OS distributions are readily available for rigorous testing of software.

Moreover, unlike VMs, Docker containers run on the Docker Engine rather than a hypervisor. The Docker Engine creates containers as lightweight, independent, stable, and highly responsive units because they share the host kernel instead of booting their own. Docker containers have relatively low overhead since they can share a single OS kernel and software dependencies. Because the choice between VMs and containers depends largely on the type of workload, many organizations are adopting a hybrid approach. Furthermore, since running microservices on VMs is hard to implement and manage, many IT companies no longer rely on virtual machines as their primary option and prefer to migrate to containers. However, businesses seeking enterprise-grade protection for their networks still prefer virtual machines.

The Final Verdict!

Containers are not competitors to virtual machines. Rather, in many scenarios, they are complementary tools designed to match the requirements of various workloads and applications. Virtual machines are designed for software that is typically static and changes infrequently. Docker, on the other hand, is designed to be more versatile, allowing containers to be modified quickly and easily.
