Docker is a containerization platform that holds a vital place in the world of DevOps. It is a feature-rich tool, and to understand it you need to be familiar with its components and its architecture. In this article, we will discuss the essential Docker components and the Docker architecture so that you can use the platform to manage containerized applications efficiently. So, let’s begin by understanding containers and virtual machines.

Containers and Virtual Machines

There are plenty of differences between containers and virtual machines, but the core difference is what they virtualize. Containers virtualize the operating system, so multiple isolated workloads run on a single OS. Virtual machines virtualize the hardware, so multiple operating system instances run on a single physical machine. Containers are a good fit for microservices and offer benefits such as separation from the underlying infrastructure and application portability. Because containers share the host OS kernel instead of booting a full guest operating system, they start faster and are more lightweight than virtual machines. Next, let us take a look at the advantages offered by Docker.

Advantages of Using Docker

Here are some of the key advantages that you get by using Docker:

- Consistency and isolated environment

Containers are isolated from other containers and applications. They also give developers predictable environments: a container behaves the same way regardless of where it is deployed. This consistency noticeably improves developer productivity.

- Mobility

Docker images are portable and deploy consistently. Additionally, containers can run on multiple operating systems, such as macOS, Linux, and Windows. The Docker image format is also supported by the most popular cloud providers, such as AWS (Amazon Web Services), Microsoft Azure, and GCP (Google Cloud Platform).

- Cost-effective, fast deployment

Containers deploy in just a few seconds. Traditional deployment involves time-consuming steps, such as hardware setup and provisioning, that add a lot of extra work. With Docker, once you package a process in a container, you can share it with other applications, so deployment becomes faster.

- Flexibility

Docker provides greater flexibility in certain areas. For instance, even during the release of a product, you can easily upgrade the containers and make the desired changes. You can build, test, and publish images for deployment on various servers.

- Test and rollback

If you want to go back to a previous version of a Docker image, you can simply roll back to it from the current one. This makes Docker a good fit for CI/CD (continuous integration and continuous deployment) environments.

- Time-saving

With Docker, you can create an architecture in which applications made up of small processes communicate with each other through APIs. This makes it easy for developers to collaborate, share their work, and resolve issues quickly, which shortens the development cycle. Therefore, Docker helps you save a lot of time. Moving on, the next section talks about the Docker Engine, which is an important component of the Docker architecture.

Docker Engine

Docker Engine helps you build, assemble, and run applications. It relies on the following components:

- Docker Engine REST API: An Application Programming Interface that applications use to talk to the Docker daemon. You can call it with any HTTP client. It tells the Docker daemon what to do.

- Docker Daemon: A background process that manages containers, Docker images, and networks. The Docker daemon accepts Docker API requests and processes them.

- Docker CLI: A command-line interface for managing container instances. It interacts with the Docker daemon on your behalf.
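
To make the relationship between these three components more concrete, here is a minimal Python sketch of the kind of HTTP request a client might send to the daemon. It assumes a Linux host where the daemon listens on the Unix socket `/var/run/docker.sock`, and it uses the unversioned `/containers/json` endpoint for brevity. The helper names `build_request` and `send` are made up for this illustration.

```python
import socket

# Assumption: default location of the daemon's Unix socket on Linux.
DOCKER_SOCKET = "/var/run/docker.sock"


def build_request(path):
    """Build a minimal HTTP/1.1 GET request for the Engine REST API."""
    lines = [
        f"GET {path} HTTP/1.1",
        "Host: docker",        # placeholder value; the socket selects the daemon
        "Connection: close",   # ask the daemon to close after one response
        "",
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def send(request, socket_path=DOCKER_SOCKET):
    """Send the request over the Unix socket and return the raw response."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(socket_path)
        sock.sendall(request)
        chunks = []
        while True:
            chunk = sock.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
    return b"".join(chunks)


# Roughly what the CLI asks the daemon for when you list containers.
request = build_request("/containers/json")
print(request.decode("ascii"))

# To contact a running daemon (requires permission on the socket):
# print(send(request).decode("utf-8", errors="replace"))
```

The sketch only builds and prints the request, so it runs without Docker installed. The commented-out call shows where the daemon would come into play.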

Platforms that Support Docker

You can run Docker on the following platforms:

- Server: Multiple Linux distributions

- Desktop: Windows 10, macOS

- Cloud: AWS, IBM Cloud, Microsoft Azure, etc.

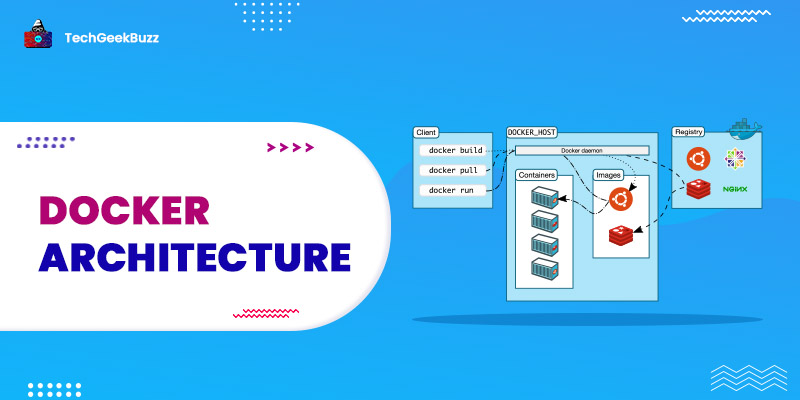

Docker Architecture

The Docker architecture is composed of several essential components. We will discuss each of them one by one:

1. Docker Client

With the Docker client, users interact with Docker through Docker commands and the Docker API. When you run a Docker command, the client sends it to the Docker daemon, which carries it out. The Docker client can also communicate with more than one daemon. A typical use of the client is to have images pulled from a registry and run on the Docker Host. Below are some of the most popular commands to work with the Docker client:

- docker build

- docker pull

- docker run
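
As a rough illustration of how these three commands fit together, the following Python sketch assembles the argument lists for them. The image name `myapp:1.0` and the container name `web` are placeholders, and the helper functions are hypothetical wrappers written for this example. By default nothing is executed, so the sketch runs even without Docker installed.

```python
import shlex
import subprocess


def build_cmd(tag, context="."):
    """`docker build`: build an image from the Dockerfile in `context`."""
    return ["docker", "build", "-t", tag, context]


def pull_cmd(image):
    """`docker pull`: download an image from a registry."""
    return ["docker", "pull", image]


def run_cmd(image, name=None, detach=False):
    """`docker run`: create and start a container from an image."""
    cmd = ["docker", "run"]
    if detach:
        cmd.append("-d")            # run in the background
    if name:
        cmd += ["--name", name]     # give the container a readable name
    cmd.append(image)
    return cmd


def execute(cmd, dry_run=True):
    """Return the command line; only call Docker when dry_run is False."""
    printable = shlex.join(cmd)
    if not dry_run:
        subprocess.run(cmd, check=True)
    return printable


print(execute(build_cmd("myapp:1.0")))
print(execute(pull_cmd("nginx:latest")))
print(execute(run_cmd("nginx:latest", name="web", detach=True)))
```

Passing `dry_run=False` would hand the same argument list to the Docker client, which in turn forwards the request to the daemon.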

2. Docker Host

The Docker Host consists of several components: the daemon, images, containers, networks, and storage. The daemon carries out every container-related action. It accepts commands through the command-line interface or the REST API, and it can also communicate with other daemons to manage services. When the client makes a request, the Docker daemon can pull or build container images. Once an image is available, the daemon builds a working model for the container by following the instructions in a build file.

3. Docker Objects

Moving on, the next important component of Docker is Objects. When you work with Docker, you use containers, images, networks, and volumes. These are collectively known as Docker Objects. Each of them is described in detail below:

Images

Images are read-only binary templates used to create Docker containers. An image is made up of layers, all of which are read-only. When a container starts from an image, Docker adds a thin writable layer on top, and changes made inside the container go there. Images also package the application itself. In an enterprise, you can share container images through a private container registry, or you can simply pull an image from Docker Hub and use it. To create a modified version of an image, you add instructions on top of the original image. To create your own images, write a Dockerfile. Docker images are lightweight software packages that include code, a runtime, system tools, system libraries, and so on.
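
The layering idea can be modeled in a few lines of Python. This is only a toy model, not how Docker stores layers on disk: each layer is a frozen dictionary of file paths, and a "container" is a `ChainMap` with one writable dictionary placed on top. The file names and contents are invented for the illustration.

```python
from collections import ChainMap
from types import MappingProxyType

# Image layers: read-only mappings from file path to file content.
base_layer = MappingProxyType({"/etc/os-release": "example-os", "/bin/sh": "shell"})
app_layer = MappingProxyType({"/app/main.py": "print('v1')"})


def start_container(*image_layers):
    """Stack a fresh writable layer on top of the shared image layers."""
    writable = {}
    # ChainMap looks up keys left to right, so upper layers shadow lower
    # ones, and every write lands in the first (writable) mapping only.
    return ChainMap(writable, *reversed(image_layers))


c1 = start_container(base_layer, app_layer)
c2 = start_container(base_layer, app_layer)

# Changing a file in one container does not touch the image or c2.
c1["/app/main.py"] = "print('patched')"

print(c1["/app/main.py"])         # print('patched')
print(c2["/app/main.py"])         # print('v1')
print(app_layer["/app/main.py"])  # print('v1')
```

Both containers share the same image layers, which is one reason why starting many containers from one image is cheap.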

Containers

Container is an extremely common term in the Docker world. A container is a standard unit of software that packages the code and the dependencies required to run an application. Containers provide a defined environment in which to run your applications. To start, stop, or delete a container, you can use the Docker API or the command-line interface. Containers are usually smaller than virtual machines, so you can spin them up quickly, which results in better server density. Docker containers also occupy much less space because images are often only a few megabytes in size. A single server can therefore host more applications, which makes containers more flexible. It is worth noting that Docker containers keep applications isolated from other applications as well as from the underlying system.

Networks

Networking lets containers communicate with other containers as well as with the Docker host. There are two kinds of networks:

- Default Docker Network

- User-defined Network

Docker provides the following network drivers by default:

- None

- Bridge

- Host

- Overlay

- Macvlan

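Of these, none and host act directly on the container’s network stack: none disables it, while host shares the stack of the Docker host. The default bridge network is convenient for getting started, but it is not commonly used for larger deployments because of its limited scalability and its constraints on network usage. Here is a detailed explanation of each network: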

- None: This network driver disables all networking for the container. It is not available for swarm services and is normally used together with a custom network driver.

- Bridge: This is the default network driver. If you do not specify a driver, a bridge network is used. It is the usual choice when applications run in standalone containers and have to communicate with each other.

- Host: Use this network when you need to remove the network isolation between the Docker host and the containers.

- Overlay: The Overlay network connects several Docker daemons together and lets swarm services communicate with each other. It also lets you establish communication between a standalone container and a swarm service.

- Macvlan: To make a container appear as a physical device on the network, use the Macvlan network. It lets you assign a MAC address to the container, and the Docker daemon routes traffic to containers by their MAC addresses.

Use the Macvlan network for legacy applications that need to be connected directly to the physical network instead of being routed through the Docker host’s network stack. With the Bridge or Overlay network, a bridge sits between the container and the host. The Macvlan network removes that bridge, which exposes container resources directly to external networks and removes the need for port forwarding.
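
The guidance above can be condensed into a small decision helper. The Python sketch below is a simplification under stated assumptions: the function names, the requirement flags, and the priority order between them are invented for this example. Real overlay and macvlan networks usually need extra options (such as swarm mode or a parent interface) that are omitted here. The commands are only assembled, not executed.

```python
# Networks that Docker provides by name; no `docker network create` needed.
BUILT_IN = {"none", "host"}


def choose_driver(no_network=False, needs_mac_address=False,
                  multi_host=False, share_host_stack=False):
    """Pick a network driver from a few coarse requirements."""
    if no_network:
        return "none"       # disable networking entirely
    if needs_mac_address:
        return "macvlan"    # container appears as a physical device
    if multi_host:
        return "overlay"    # connect several Docker daemons
    if share_host_stack:
        return "host"       # remove isolation from the host
    return "bridge"         # default for standalone containers


def commands_for(image, network_name, driver):
    """Return the docker commands to run `image` on the chosen network."""
    if driver in BUILT_IN:
        return [["docker", "run", "--network", driver, image]]
    return [
        ["docker", "network", "create", "--driver", driver, network_name],
        ["docker", "run", "--network", network_name, image],
    ]


driver = choose_driver()
for cmd in commands_for("nginx", "app-net", driver):
    print(" ".join(cmd))
```

Creating a named network first and then attaching containers to it is also how a user-defined network differs from the default one.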

Volume (Storage)

Data written inside a container goes to the container’s writable layer, which is managed by a storage driver. This storage is non-persistent in nature: the data is lost when the container is removed. For persistent storage, here are some options:

- Data volumes: Data volumes provide persistent storage. You can also name and list volumes.

- Data volume container: If you want a dedicated container that hosts a volume and mounts it into other containers, use a data volume container. Because it is independent of the application container, you can share it with more than one container.

- Directory mounts: These mount a local directory of the host into a container. Use directory mounts when a directory on the host machine should be the source of the volume.

- Storage plugins: If you want to connect to external storage platforms, use storage plugins.
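
The first three options map onto flags of `docker run`. The Python sketch below assembles them without running anything. The volume name `app-data`, the host path `/srv/config`, and the container name `data-holder` are placeholders chosen for this example, and the helper functions are hypothetical.

```python
def volume_mount(volume, target):
    """Data volume: Docker-managed storage mounted at `target`."""
    return ["--mount", f"type=volume,source={volume},target={target}"]


def bind_mount(host_dir, target):
    """Directory mount: a host directory mounted at `target`."""
    return ["--mount", f"type=bind,source={host_dir},target={target}"]


def volumes_from(container):
    """Data volume container: reuse the volumes of another container."""
    return ["--volumes-from", container]


def run_cmd(image, *mounts):
    """Assemble a `docker run` command with the given storage options."""
    cmd = ["docker", "run"]
    for mount in mounts:
        cmd += mount
    cmd.append(image)
    return cmd


print(" ".join(run_cmd("nginx", volume_mount("app-data", "/data"))))
print(" ".join(run_cmd("nginx", bind_mount("/srv/config", "/etc/app"))))
print(" ".join(run_cmd("nginx", volumes_from("data-holder"))))
```

In each case the data lives outside the container’s writable layer, so it survives when the container itself is removed.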

4. Docker Registry

The Docker registry is an open-source application available under the Apache license. It is stateless and highly scalable. In simple words, a Docker registry is a location where you can store images and from which you can download them. A Docker registry can be either public or private. Here’s what you can do with a Docker Registry:

- Own the images distribution pipeline.

- Control where the images are stored.

- Integrate image storage and distribution into your in-house development workflow.

The following commands are the ones used most frequently when working with a Docker registry:

- docker push

- docker pull

- docker run
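
Each of these commands takes an image reference, and the reference determines which registry is contacted. The Python sketch below is a simplified parser written for illustration. It assumes the Docker Hub conventions (registry `docker.io`, the `library/` namespace for official images, and the `latest` tag) as defaults, and it deliberately ignores digests such as `@sha256:...`. The registry host `localhost:5000` is just an example of a private registry.

```python
def parse_image_reference(ref):
    """Split an image reference into (registry, repository, tag)."""
    first, sep, rest = ref.partition("/")
    # The first path component is a registry host only if it looks like
    # one: it contains a dot or a port, or it is literally "localhost".
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref

    # The tag is whatever follows the last colon, if there is one.
    repository, sep, tag = remainder.rpartition(":")
    if not sep or "/" in tag:
        repository, tag = remainder, "latest"

    # Official Docker Hub images live under the "library/" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository
    return registry, repository, tag


for ref in ["nginx", "myuser/myapp:2.0", "localhost:5000/team/app:1.2"]:
    print(ref, "->", parse_image_reference(ref))
```

A reference without a registry host therefore points at the public Docker Hub, while a reference that starts with a host name points at that private or third-party registry.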

Conclusion

Docker is undoubtedly a powerful tool that holds great importance in the field of DevOps. Docker’s usage begins in the continuous deployment stage of the DevOps ecosystem. It brings security, simplicity, and scalability to the process of software development. With Docker, developers can easily package the major components of an application, such as libraries and other dependencies, in a single unit. Therefore, Docker makes it quite easy to manage and run containerized applications in different environments. We hope that this article helped you develop a better understanding of the Docker architecture and the essential Docker components.
